GPT-Image-1 API: A Step-by-Step Guide With Examples

Learn how to generate and edit images with the GPT-Image-1 API thought YEScale, including setup, parameter usage, and practical examples like masking and combining multiple images.

Document at: GPT-IMAGE-1Generate Image by gpt-image-1

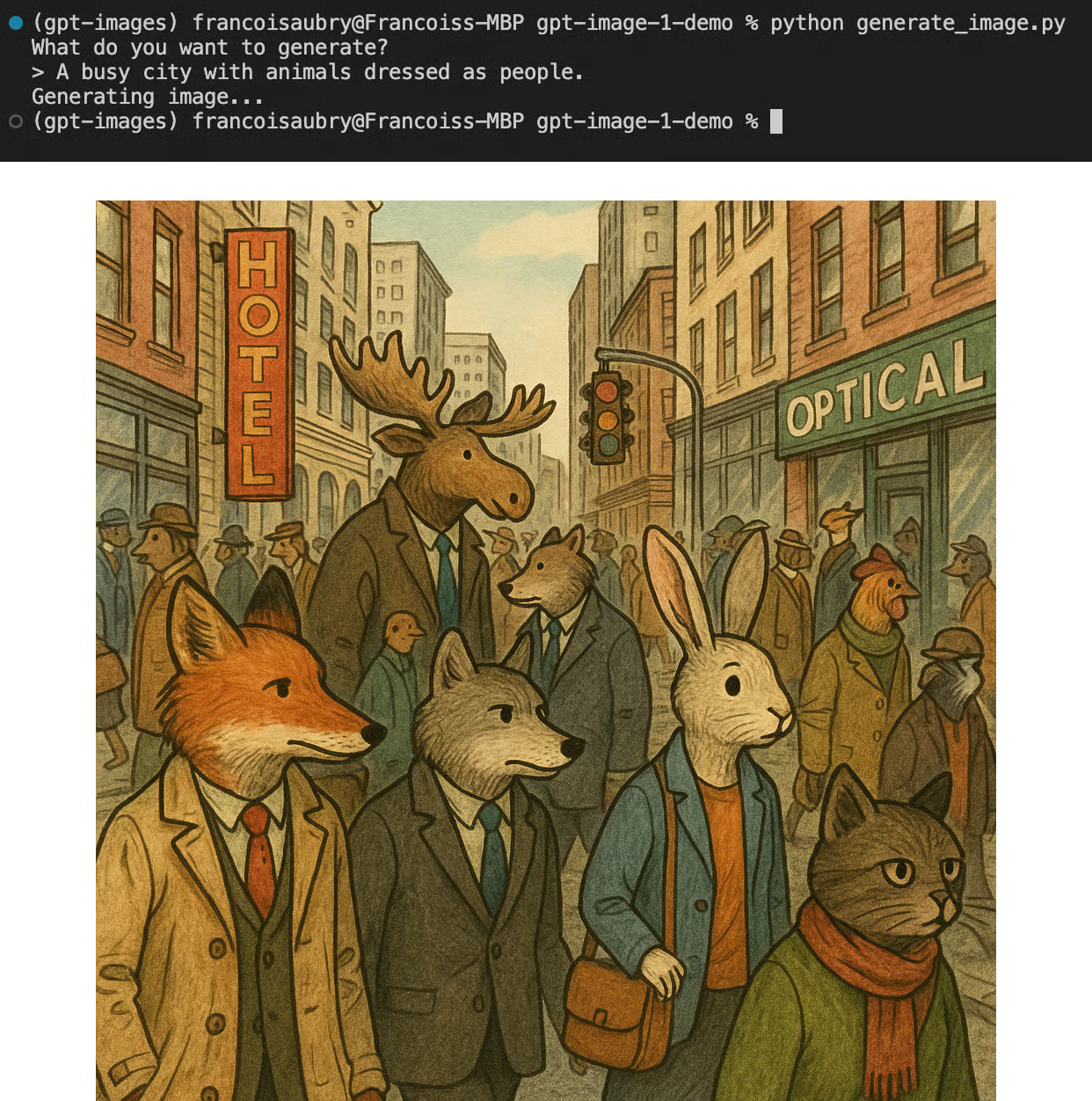

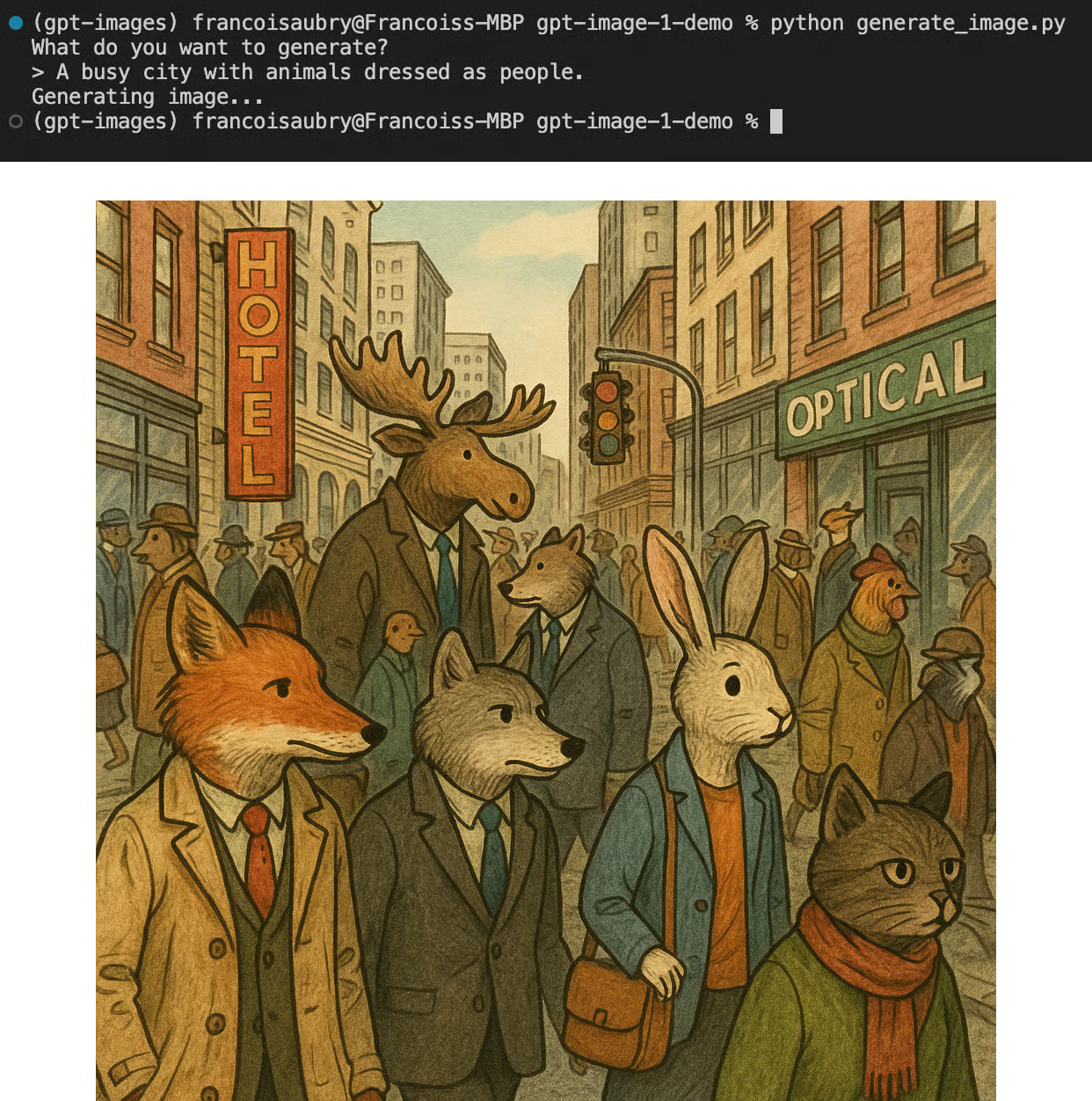

Generating Our First Image With GPT-Image-1#

Let's see how we can generate an image with gpt-image-1. Start by creating a new Python file, for example, generate_image.py, in the same folder as the .env file.Then, import the necessary packages:Note that os and base64 are built-in Python packages and don't need to be installed.Next, we load the API key and initialize the OpenAI client:Then, we ask the user to input a text prompt using the input() built-in function and send an image generation request to the API:Finally, we save the generated image into a file:The full script can be found here. To run this script, use the command:Here's an example with the output:

GPT-Image 1-parameters#

In this section, we describe the most relevant parameters of the gpt-image-1 model:prompt: The textual instruction that describes what image we want the model to generate.

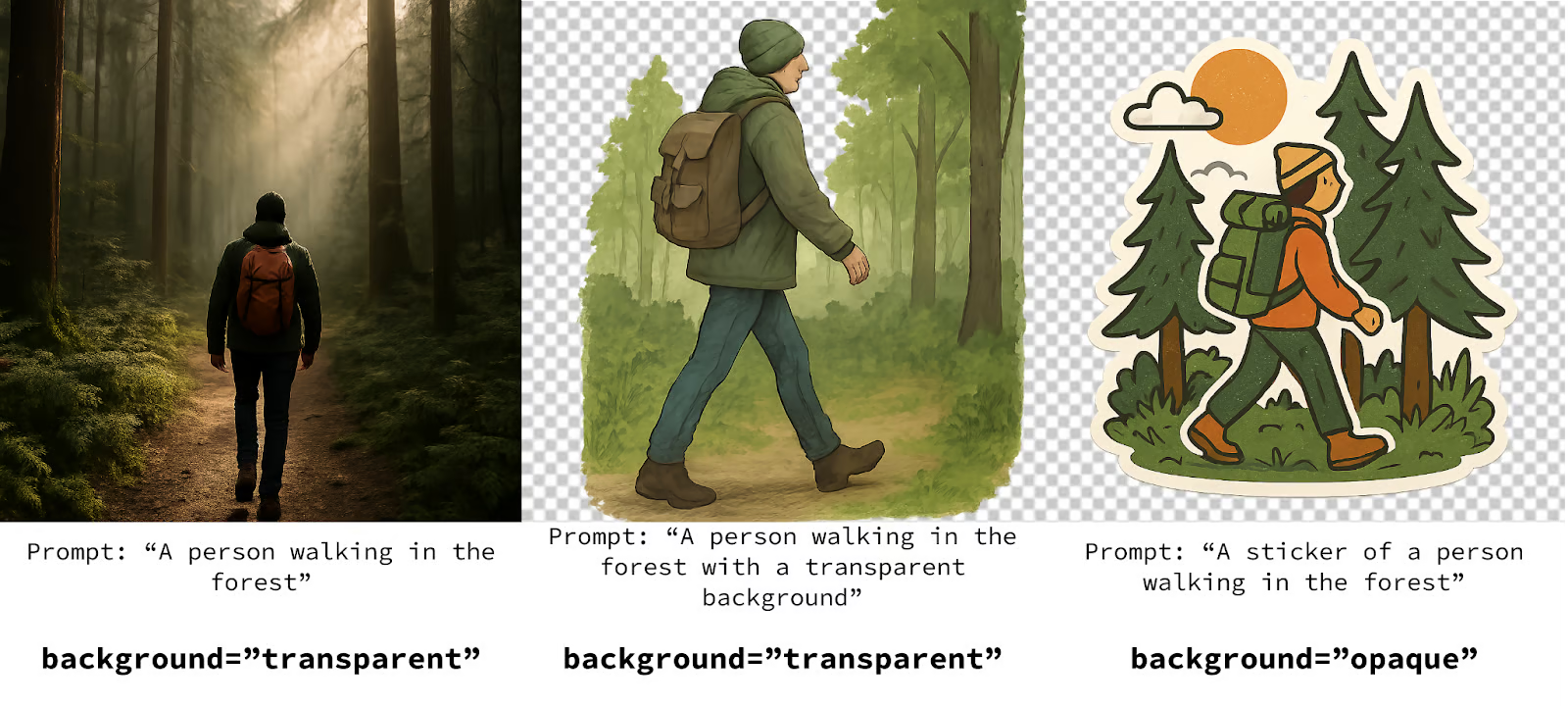

background: The type of background for the image. It must be one of "transparent", "opaque", or "auto". The default value is "auto", in which the model will decide based on the content what the best background type is. Note that JPEG images don't support transparent backgrounds.

n: The number of images to generate. Must be a number from 1 to 10.

quality: The quality of the generated image. It must be one of "high", "medium", or "low", with the default being "high".

size: The size of the image in pixels. It must be one of "1024x1024" (square), "1536x1024" (landscape), "1024x1536" (portrait).

moderation: The level of content moderation. Must be either "low" for a less restrictive filter or "auto", which is the default.

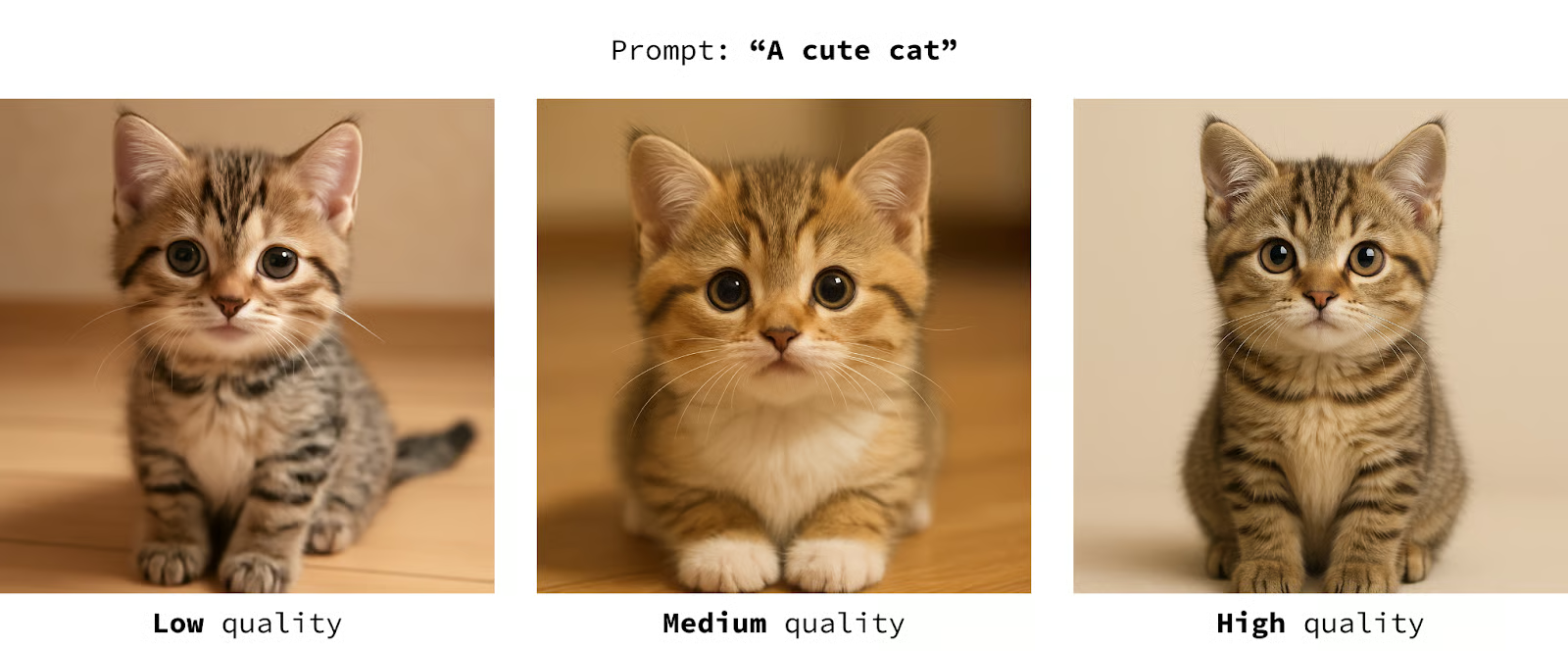

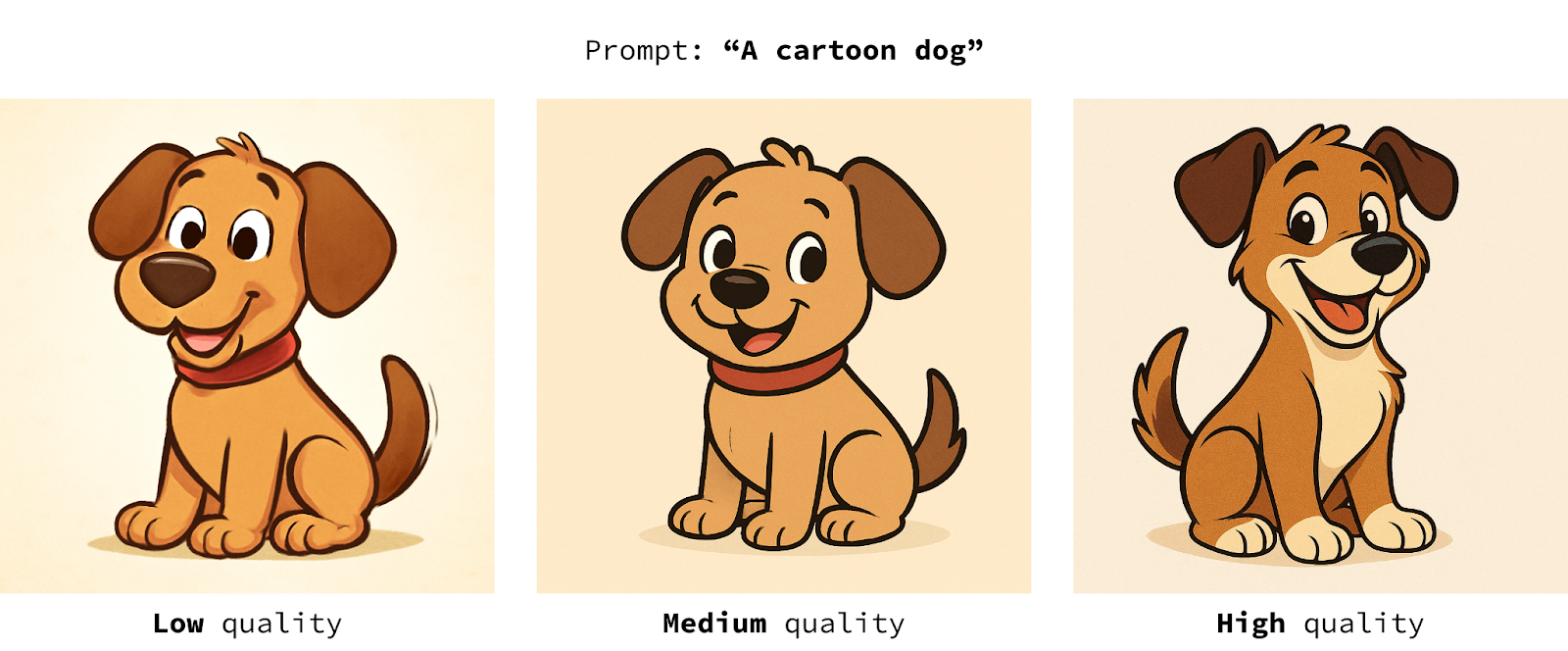

Effects of the quality parameter#

Here's a side-by-side comparison of images generated using the same prompt with different qualities:We see that the first cat (with the lowest quality) has some artifacts around the eyes, and that the image becomes significantly more realistic as the quality increases.Here's another example with a cartoon-style output:In this case, we also notice that the higher the quality, the more polished the image is. In my opinion, however, this is less relevant for content that's not supposed to be realistic.Remember that the higher the quality, the higher the cost and the computation time, so it's a good idea to figure out a good trade-off, depending on the use case. I'd recommend trying the parameters from low to high to see the minimum value that gives acceptable results for each use case.Background parameter#

In my experiments, I found that the model mostly ignored this parameter. Here are side-by-side examples where I varied the background parameters and the transparency instructions in the prompt:In the first example, the transparency parameter was ignored. In the second, I added the transparency instructions into the prompt, and it worked a little better. In the last, I asked for an opaque background but specified I wanted a sticker in the prompt and got a transparent background.I still recommend using the parameter to match what you want, but make sure to also specify the desired result in the prompt to reinforce it.Editing Images with GPT-Image-1#

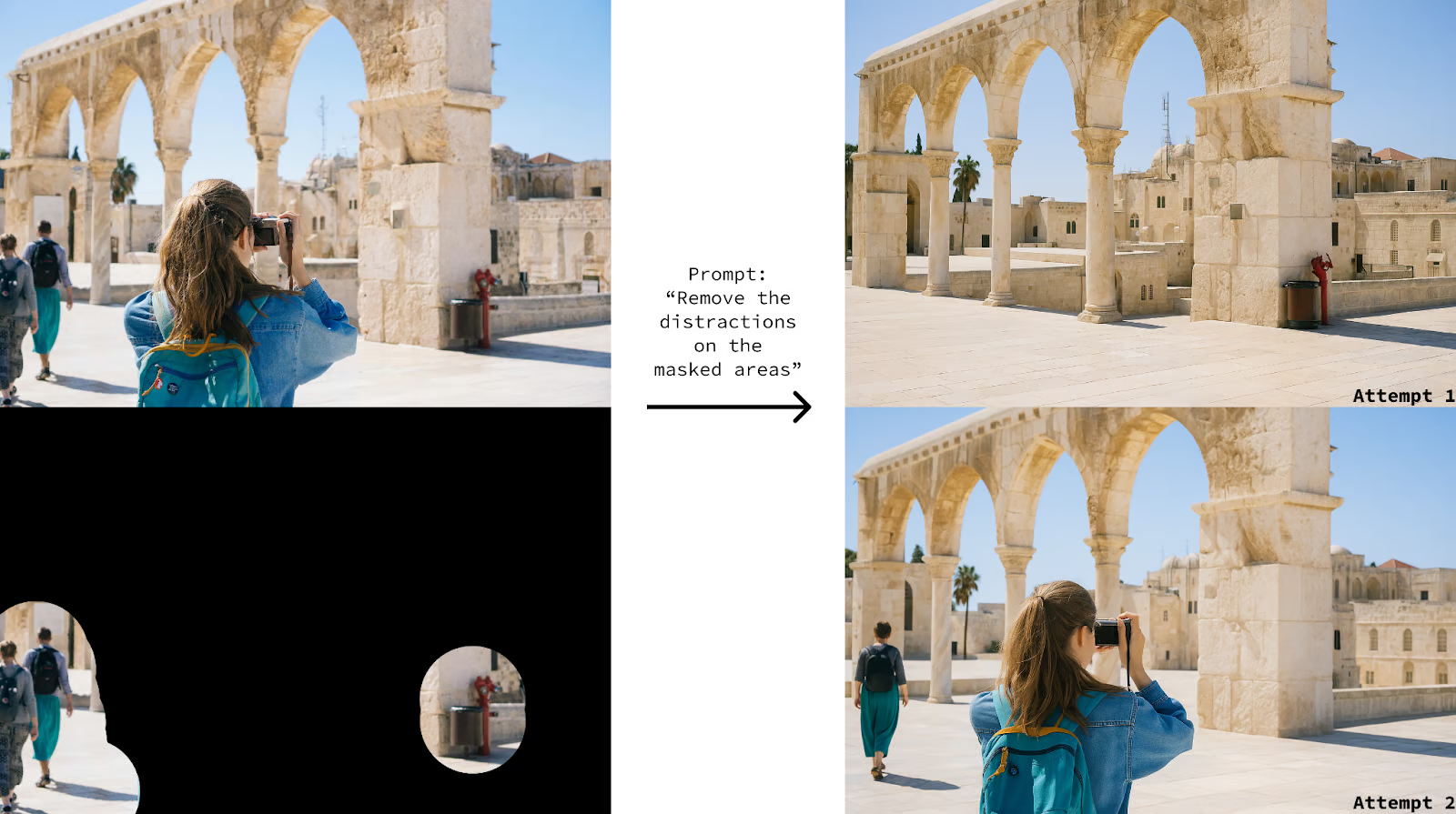

As I mentioned in the introduction, the most exciting part of GPT-Image-1 is its ability to edit images. Let's start by learning how to ask it to edit an image and then explore a few use cases.To edit images, most of the code can be reused. The only part that changes is that instead of using the client.images.generate() function, we use the client.images.edit() function.The new parameter is image. This is a list of input images to be used in the edit. In this case, we only provide one image named my-image.jpg located in the same folder as the script.Here's an example of using GPT Image 1 to edit one of my photos:Note that because the original image has a portrait ratio, I used the portrait size 1024x1536. However, this isn't the same ratio as the original image. Even in editing mode, the model can only output images in the three sizes specified above.Using masks#

Editing mode provides a mask parameter that we can use to specify the areas where the image should be edited. The mask must be a PNG image of at most 4 MB and have the same size as the image. Areas with 100% transparency correspond to the areas that GPT Image 1 is allowed to edit.We provide the mask in the same way as the image, except it isn't a list in this case:However, when I experimented with it, it didn't work very well, and I've seen reports online of people with similar issues.I've also tried using it to add elements at specific locations, and it didn't work consistently. Just like using the background parameter for image generation, I found that describing what I want in the prompt works best.Using multiple images#

The model can process and combine multiple images at once. In the example below, we use it to create a marketing poster combining the images of these three individual drinks:We provide the three images as a list in the image parameter, as follows:Modified at 2025-05-26 04:22:11